Headlines about artificial intelligence (AI) are inescapable on Earth, ranging from celebrity deepfakes to breakthroughs in fundamental science. Now, it seems even Mars is no longer a haven from AI. On Dec. 8 and 10, NASA’s Perseverance rover drove 1,496 feet (455.9 meters) across the Martian surface on a route planned by a “vision language model,” a form of generative AI that can interpret images and video as well as text.

“Autonomous technologies like this can help missions to operate more efficiently, respond to challenging terrain, and increase science return as distance from Earth grows,” NASA administrator Jared Isaacman said in a press release.

A complex human process

Mars is, on average, roughly 140 million miles from Earth, which makes driving the six-wheeled Perseverance rover a complex task. Because of the distance, there can be a lag of up to roughly 20 minutes between a command leaving Earth and the rover acting on it, so the vehicle cannot be controlled in real time. Instead, the team at the Rover Operations Center (ROC) at NASA’s Jet Propulsion Laboratory (JPL) carefully plans a route ahead of time, sends the directions to Perseverance, and waits for the results. Perseverance does have some wiggle room to deviate from its commands, thanks to its AutoNav system, which evaluates the terrain directly in front of the rover for unexpected hazards.
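To put that delay in numbers, here is a minimal sketch of the arithmetic. It assumes the 140-million-mile figure is an average distance and that radio signals travel at the speed of light; the function name is illustrative and not part of any NASA tool.

```python
# Speed of light in vacuum, expressed in miles per second.
SPEED_OF_LIGHT_MILES_PER_S = 186_282.397


def one_way_delay_minutes(distance_miles: float) -> float:
    """Minutes a radio signal needs to cover the given distance."""
    return distance_miles / SPEED_OF_LIGHT_MILES_PER_S / 60


if __name__ == "__main__":
    # Assumption: 140 million miles is the average Earth-Mars distance.
    one_way = one_way_delay_minutes(140e6)
    print(f"one way: {one_way:.1f} min, round trip: {2 * one_way:.1f} min")
```

At that distance a command takes about 12.5 minutes to arrive, and confirmation takes just as long to come back. The delay shrinks or grows as the two planets move along their orbits.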

This level of caution is born from experience; on Mars, the stakes are high. In 2009, one of Perseverance’s predecessors, the Spirit rover, got stuck in soft sand. Despite months of effort to wriggle free, the rover remained stuck until it eventually lost power, ending its mission prematurely. To avoid a similar fate, human operators use all the data at their disposal (images from space, onboard cameras, and 3D terrain maps) to lay out a series of waypoints. These waypoints are spaced no more than 330 feet (100 meters) apart; at each one, the rover stops and waits for new instructions.

Handing over the keys

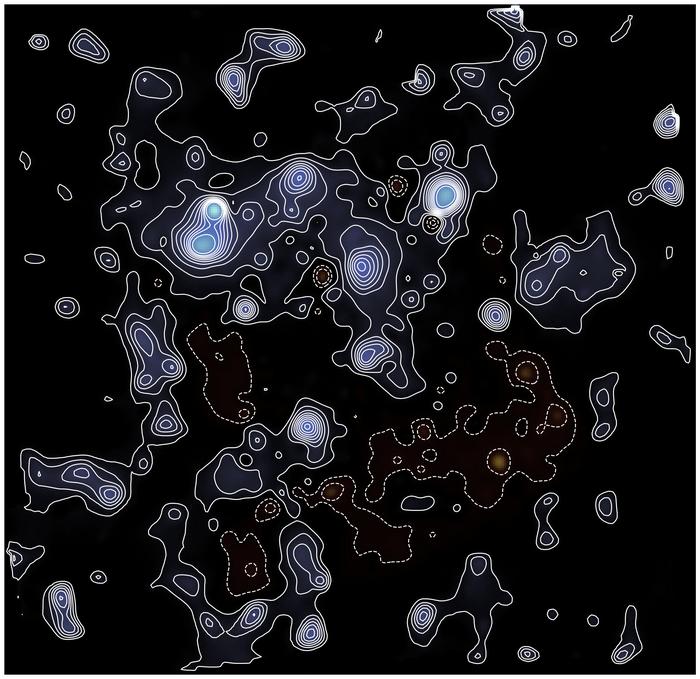

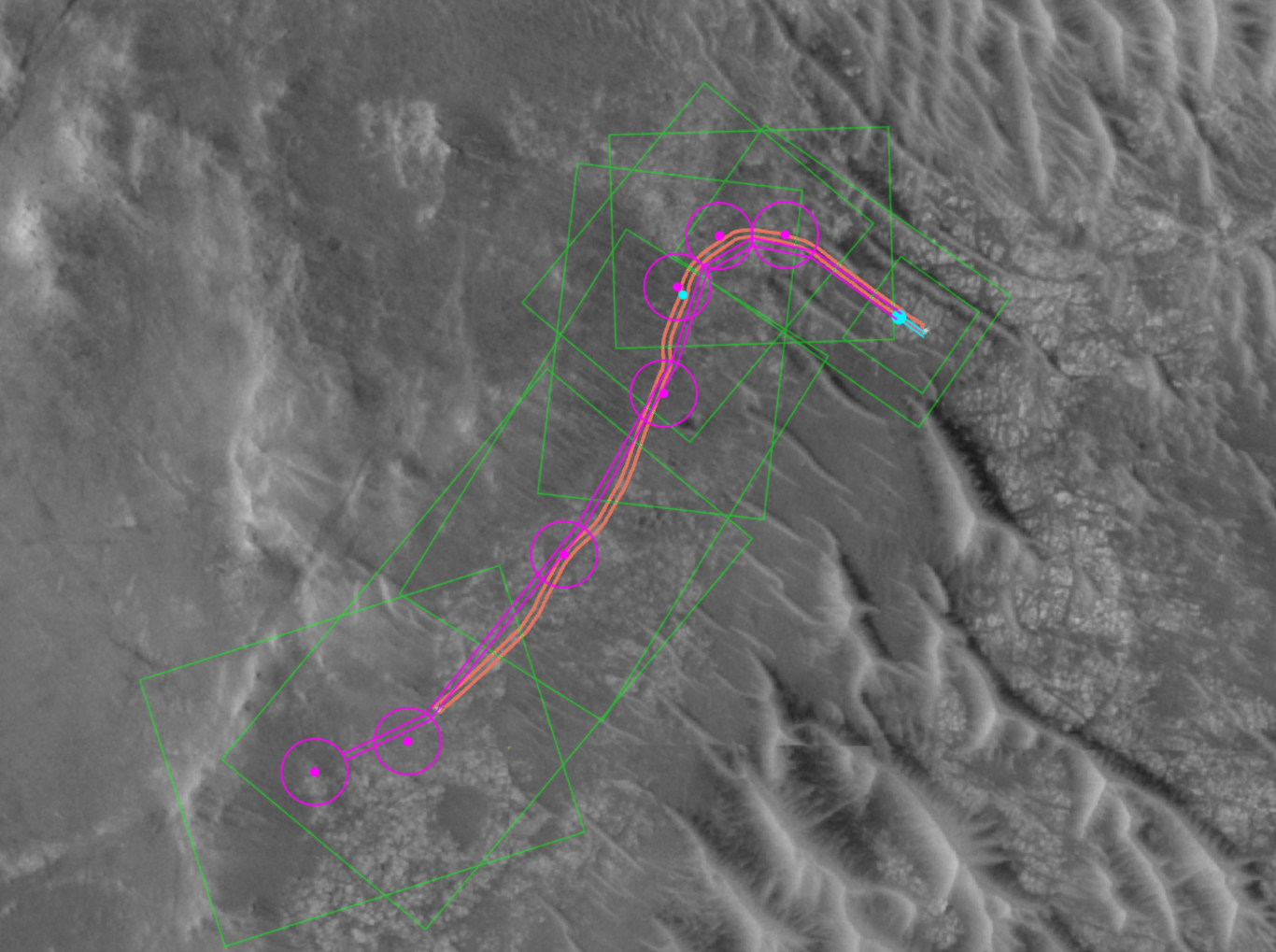

During the rover’s operations on Dec. 8 and 10, the ROC team, working with AI company Anthropic, sent the rover a route planned by a generative AI built on Anthropic’s Claude model. The AI used images from the HiRISE (High Resolution Imaging Science Experiment) camera on the Mars Reconnaissance Orbiter, along with other data gathered from the Martian surface, to plot a series of waypoints that avoided potential hazards.

Planning this drive wasn’t as simple as asking a chatbot to write an email. The JPL team used Claude Code, a tool aimed at software developers, to upload a large amount of previous mission data. This gave the AI the context it needed to understand the Martian surface. Claude then used its vision capabilities to analyze the data and stitch together a series of roughly 33-foot (10-meter) segments separated by waypoints. The model reviewed its work, edited the waypoints for safety, and finally converted the entire plan into “Rover Markup Language,” the specialized coding language NASA uses to communicate with its deep-space hardware.
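The idea of breaking a route into short segments can be sketched in a few lines. This is an illustration only, not the method JPL or Claude used: the function name, the flat x-y coordinates in meters, and the even spacing are all assumptions made for the example.

```python
import math


def split_route(points, max_segment_m=10.0):
    """Add evenly spaced waypoints so that no leg exceeds max_segment_m.

    `points` is a list of (x, y) positions in meters (hypothetical input).
    """
    waypoints = [points[0]]
    for (x0, y0), (x1, y1) in zip(points, points[1:]):
        leg = math.hypot(x1 - x0, y1 - y0)
        # Number of equal pieces needed to keep each one short enough.
        pieces = max(1, math.ceil(leg / max_segment_m))
        for i in range(1, pieces + 1):
            t = i / pieces
            waypoints.append((x0 + t * (x1 - x0), y0 + t * (y1 - y0)))
    return waypoints


if __name__ == "__main__":
    # A made-up route: 25 meters east, then 12 meters north.
    for waypoint in split_route([(0.0, 0.0), (25.0, 0.0), (25.0, 12.0)]):
        print(waypoint)
```

A real planner would also have to weigh slope, rocks, and sand at every waypoint, which is where the orbital imagery and terrain data come in.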

Reviewing the route

To verify the AI’s work, the ROC team ran the commands through a rigorous simulation that used a virtual version of the rover and more than 500,000 variables to predict hazards along the route. The team made a few manual tweaks, then sent the finalized directions to Perseverance through NASA’s Deep Space Network. On Dec. 8, the rover drove 689 feet (210 meters), guided by Claude’s route. On Dec. 10, it continued an additional 807 feet (245.9 meters), bringing the total trip length to 1,496 feet (455.9 meters).

While that distance may not seem like much, engineers hope these drives will grow longer until AI can take over the time-consuming task of route planning. This shift could allow greater focus on analyzing data from the rover’s ongoing discoveries. Just last year, Perseverance detected electric sparks within Martian dust devils, providing evidence of static electricity in the atmosphere. Additionally, the rover identified a potential biosignature in a rock nicknamed “Cheyava Falls,” where a sample showed organic compounds and chemical markers that could be related to ancient microbial life.

By automating navigation, NASA aims to optimize the time spent on these scientific priorities. As Vandi Verma, a space roboticist at JPL, explained in the press release, “We are moving towards a day where generative AI and other smart tools will help our surface rovers handle kilometer-scale drives while minimizing operator workload, and flag interesting surface features for our science team by scouring huge volumes of rover images.”