This month, Apple released the iPhone 14 Pro with a 48-megapixel camera, the highest-resolution camera ever in an iPhone. However, the camera also has the flexibility to produce a 12-megapixel image. With this latest generation of iPhones, Apple joins most leading Android smartphones that already have this feature, giving shutterbugs the option to take a high-resolution image with more detail or a lower-resolution image with greater sensitivity in low-light conditions.

The key to this feature, which has suddenly become an industry standard, is a technique called pixel binning.

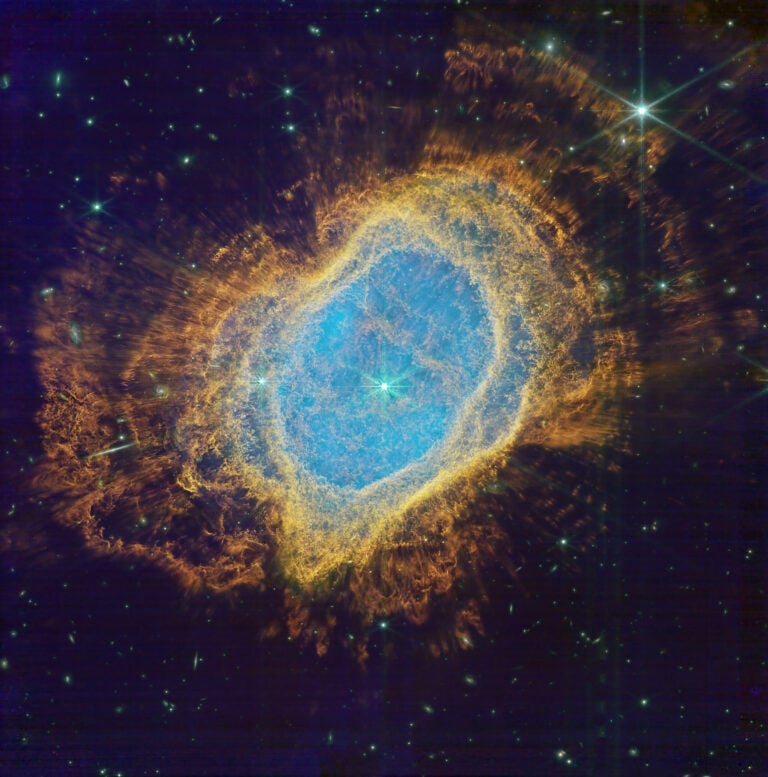

Pixel binning is often described as an emerging technology — if you’re looking just at smartphones, some point to the Nokia 808 PureView from 2012 as the first to implement it. But in truth, pixel binning goes back much further than that. It’s an image processing technique that dates to the beginning of digital imaging, and one that astronomers and astroimagers have used for decades.

What is pixel binning?

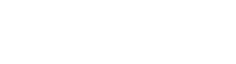

At the most basic level, “binning” any sort of data means taking neighboring values and grouping them together. For example, shoe sizes are a way of “binning” the distribution of sizes of feet to a manageable number of sizes to manufacture. A histogram represents a distribution of values by putting data points within a certain interval into “bins” to produce a chart that shows the most common ranges of values.
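To make the histogram idea concrete, here is a minimal Python sketch. The function name `histogram`, the 10-millimeter bin width, and the foot lengths are all made up for illustration.

```python
from collections import Counter

def histogram(values, bin_width):
    """Count how many values fall into each interval of width bin_width.

    Each key is the lower edge of a bin; each value is the number of
    data points that landed in that bin.
    """
    counts = Counter((v // bin_width) * bin_width for v in values)
    return dict(sorted(counts.items()))

# Hypothetical foot lengths in millimeters.
foot_lengths_mm = [243, 247, 251, 252, 258, 261, 263, 264, 270, 281]

print(histogram(foot_lengths_mm, 10))
# {240: 2, 250: 3, 260: 3, 270: 1, 280: 1}
```

Ten individual measurements collapse into five bins, which is the same trade a shoe manufacturer makes when it settles on a handful of sizes.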

Conceptually, pixel binning is similar: A camera sensor takes multiple pixels — for instance, a 2×2 square of adjacent pixels — and counts the amount of light they record as if they were one pixel.

Pixel binning introduces a tradeoff: You lose resolution, but gain sensitivity. Binning an entire camera sensor’s pixel grid into 2×2 groups will reduce the image resolution by a factor of two along each axis and the total number of effective pixels by a factor of four. But in return, each superpixel is effectively four times as sensitive to light, which makes binning especially useful for low-light situations like astrophotography. Like telescopes, pixels are “light buckets”: The larger they are, the more light they collect.
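The operation itself is short enough to write out. The following is a minimal sketch using plain Python lists. The function name `bin_pixels` and the 4×4 frame of photon counts are illustrative assumptions, not any camera’s actual firmware.

```python
def bin_pixels(image, factor=2):
    """Sum each factor x factor block of a 2D list into one superpixel."""
    rows, cols = len(image), len(image[0])
    if rows % factor or cols % factor:
        raise ValueError("image dimensions must be multiples of the bin factor")
    return [
        [
            sum(
                image[r + dr][c + dc]
                for dr in range(factor)
                for dc in range(factor)
            )
            for c in range(0, cols, factor)
        ]
        for r in range(0, rows, factor)
    ]

# Hypothetical 4x4 frame of photon counts.
frame = [
    [10, 12,  3,  4],
    [11,  9,  5,  2],
    [ 0,  1, 20, 22],
    [ 2,  1, 21, 19],
]

binned = bin_pixels(frame)
print(binned)  # [[42, 14], [4, 82]]
```

The 4×4 frame becomes a 2×2 frame: half as many pixels along each axis, a quarter as many in total, and each superpixel holds the light of four originals.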

In reality, the gain in quality of the eventual image is not quite as high as that factor of four might suggest. That’s because grouping the pixels together also adds up the stray (dark) current from each of them, so the noise grows along with the signal.

There are also differences in how different types of sensors read out an image that can affect the benefits of pixel binning. Charge-coupled devices, or CCDs, can perform binning at the same time as they read out a line of pixels. Newer CMOS (complementary metal-oxide-semiconductor) sensors — which are now nearly universal in consumer cameras — read out each pixel individually before binning, which adds a bit of read noise to each pixel and slightly degrades the quality. But still, regardless of your type of sensor, pixel binning can be a tradeoff worth making.
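A simplified noise budget shows how these effects play out. The sketch below assumes a common textbook model rather than the behavior of any particular sensor: Photon (shot) noise and dark-current noise grow as the square root of the collected charge, and read noise is added in quadrature once per readout. The function name `snr` and every number in it are hypothetical.

```python
import math

def snr(signal_e, dark_e, read_noise_e, n_pixels=1, reads=1):
    """Signal-to-noise ratio of n_pixels summed together.

    signal_e:     photoelectrons collected per pixel
    dark_e:       dark-current electrons per pixel
    read_noise_e: read noise (electrons RMS) added by each readout
    reads:        number of readouts contributing to the sum
    """
    total_signal = n_pixels * signal_e
    # Shot and dark noise variances equal the charge collected;
    # every readout adds read_noise_e squared on top of that.
    variance = n_pixels * (signal_e + dark_e) + reads * read_noise_e ** 2
    return total_signal / math.sqrt(variance)

single = snr(100, 10, 5)                          # one unbinned pixel
ccd_binned = snr(100, 10, 5, n_pixels=4, reads=1)   # charge summed, read once
cmos_binned = snr(100, 10, 5, n_pixels=4, reads=4)  # each pixel read, then summed

print(f"unbinned: {single:.1f}")
print(f"CCD-style 2x2 bin: {ccd_binned:.1f}")
print(f"CMOS-style 2x2 bin: {cmos_binned:.1f}")
```

Under these assumptions, collecting four times the light improves the signal-to-noise ratio by roughly a factor of two rather than four, with the single readout of CCD-style binning coming out slightly ahead.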

In other words, pixel binning isn’t smoke and mirrors — fundamentally, it’s simply addition and statistics.

So what’s the big deal? Why hasn’t pixel binning been more common?

For decades, astrophotographers using dedicated astroimaging cameras have been able to turn pixel binning on and off as a toggle in their camera or software settings.

So why haven’t consumer cameras offered pixel binning until recently?

The answer is that astroimaging cameras are traditionally monochrome cameras, and consumer cameras are color — and pixel binning becomes more complicated with color cameras due to how they capture color data.

Traditionally, astrophotographers would create a color image by combining images taken with a red filter, a green filter, and a blue filter. But since a filter wheel isn’t exactly a practical accessory for an everyday camera, most cameras capture color data by embedding a pattern of red, green, and blue color filters that covers every photosite (or pixel) on the image sensor.

Put another way, all color cameras are monochrome cameras — underneath their filter arrays.

Until recently, nearly every color digital camera used alternating red/green and blue/green lines that form a checkerboard-like pattern called a Bayer array. (A notable exception is Fujifilm’s popular X line of mirrorless cameras, which use an unconventional 6×6 pattern.) The Bayer array is named for Bryce Bayer, who invented it while working for Kodak in the 1970s.

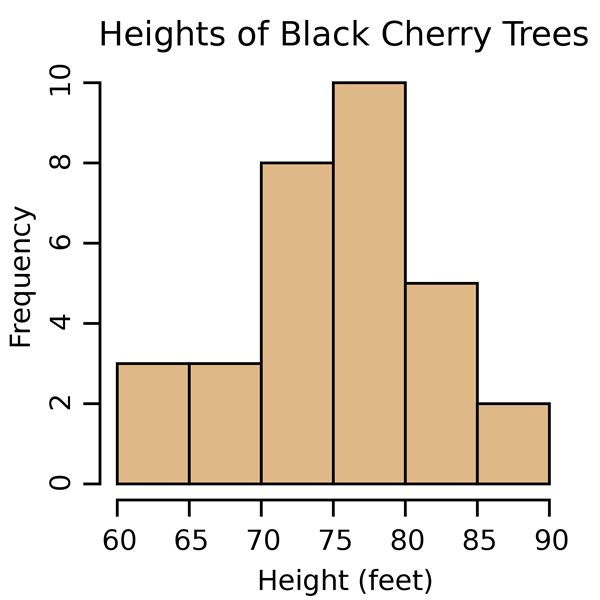

In a Bayer array, every 2×2 group of pixels contains two green pixels, situated diagonally from each other, and one red and one blue pixel. To determine the color at a particular point in the image, the camera’s internal processor samples surrounding pixels to interpolate full RGB color data for each pixel in a process called demosaicing.
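As a rough illustration, the layout and the simplest possible interpolation step can be sketched in Python. This assumes an RGGB ordering (red in the top-left corner) and a plain average of neighboring pixels. Real cameras use far more sophisticated demosaicing, and the function names and mosaic values here are invented for the example.

```python
def bayer_color(row, col):
    """Filter color at (row, col) for an RGGB Bayer layout (assumed ordering)."""
    if row % 2 == 0:
        return "R" if col % 2 == 0 else "G"
    return "G" if col % 2 == 0 else "B"

def interpolate_green(mosaic, row, col):
    """Estimate green at any site.

    Green sites return their own value. Red and blue sites average their
    in-bounds up/down/left/right neighbors, which are all green in a
    Bayer array.
    """
    if bayer_color(row, col) == "G":
        return mosaic[row][col]
    neighbors = [
        mosaic[r][c]
        for r, c in ((row - 1, col), (row + 1, col), (row, col - 1), (row, col + 1))
        if 0 <= r < len(mosaic) and 0 <= c < len(mosaic[0])
    ]
    return sum(neighbors) / len(neighbors)

# Print the filter layout for a 4x4 patch of sensor.
for r in range(4):
    print(" ".join(bayer_color(r, c) for c in range(4)))
# R G R G
# G B G B
# R G R G
# G B G B

# Hypothetical raw values, one number per photosite.
mosaic = [
    [50, 80, 52, 84],
    [82, 20, 86, 22],
    [54, 88, 56, 90],
    [92, 24, 94, 26],
]
print(interpolate_green(mosaic, 1, 1))  # 84.0, green estimate at a blue site
```

Repeating the same idea for red and blue fills in all three channels at every pixel.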

A Bayer array, however, makes pixel binning much more complicated. To bin a 2×2 group, the camera would normally sum the data from its red, two green, and blue pixels into one value, but that throws away the color data, resulting in a monochrome image. Binning each color separately requires a more complicated algorithm, which introduces artifacts and negates much of the gain in sensitivity.
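A few lines of Python show the problem. The sketch assumes an RGGB Bayer ordering, and the helper names are hypothetical. It simply lists which filter colors end up inside each 2×2 bin.

```python
def bayer_color(row, col):
    """Filter color at (row, col), assuming an RGGB Bayer layout."""
    return "RG"[col % 2] if row % 2 == 0 else "GB"[col % 2]

def colors_in_bin(row, col, factor=2):
    """Sorted filter colors covered by the bin whose top-left pixel is (row, col)."""
    return sorted(
        bayer_color(row + dr, col + dc)
        for dr in range(factor)
        for dc in range(factor)
    )

# Every 2x2 bin on the sensor mixes all three colors.
print(colors_in_bin(0, 0))  # ['B', 'G', 'G', 'R']
print(colors_in_bin(2, 2))  # ['B', 'G', 'G', 'R']
```

Because every bin contains the same mix of red, green, and blue, the summed values carry brightness but no color.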

To enable color cameras to pixel bin more effectively, camera sensor manufacturers have rearranged the entire grid of filters into a pattern called a quad-Bayer array (or quad-pixels, in Apple’s preferred terminology). In this pattern, each 2×2 group is the same color, forming a larger Bayer pattern with four pixels in each superpixel.

This way, when cameras bin each 2×2 group, they can preserve the color data and demosaic the image of superpixels just like a standard Bayer filter image.
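Here is a minimal sketch of that idea in Python, assuming the larger pattern follows an RGGB ordering. The function names and the raw values are made up for illustration and are not taken from any manufacturer’s pipeline.

```python
def quad_bayer_color(row, col):
    """Filter color at (row, col) in a quad-Bayer layout: an RGGB Bayer
    pattern (assumed ordering) in which each filter covers a 2x2 block."""
    block_row, block_col = row // 2, col // 2
    return "RG"[block_col % 2] if block_row % 2 == 0 else "GB"[block_col % 2]

def bin_quad_bayer(mosaic):
    """Sum each same-color 2x2 block; return (binned values, binned colors)."""
    values, colors = [], []
    for r in range(0, len(mosaic), 2):
        values.append([
            mosaic[r][c] + mosaic[r][c + 1] + mosaic[r + 1][c] + mosaic[r + 1][c + 1]
            for c in range(0, len(mosaic[0]), 2)
        ])
        colors.append([quad_bayer_color(r, c) for c in range(0, len(mosaic[0]), 2)])
    return values, colors

# Filter layout for a 4x4 patch of a quad-Bayer sensor.
for r in range(4):
    print(" ".join(quad_bayer_color(r, c) for c in range(4)))
# R R G G
# R R G G
# G G B B
# G G B B

# Hypothetical raw values, one number per photosite.
mosaic = [
    [10, 11, 30, 31],
    [12, 13, 32, 33],
    [34, 35,  5,  6],
    [36, 37,  7,  8],
]
values, colors = bin_quad_bayer(mosaic)
print(values)  # [[46, 126], [142, 26]]
print(colors)  # [['R', 'G'], ['G', 'B']]
```

The binned result is an ordinary Bayer mosaic at half the linear resolution, so a standard demosaicing step can take over from there.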

The quad-Bayer pattern also gives smartphone makers other options, such as new ways of taking high dynamic range (HDR) photos. Typically, an HDR image requires taking successive photos with different exposures to gain more detail in highlights and shadows. But the lag between exposures can produce blurring and ghosting artifacts. With a quad-Bayer array, each of the four pixels in a superpixel can have different exposure settings, allowing up to four images with different exposures to be taken simultaneously.
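How those four readings get combined is up to each manufacturer. The Python sketch below shows one simple possibility and is purely an assumption: It discards saturated pixels and divides the remaining counts by their total exposure. The saturation level, exposure ratios, and function name are all hypothetical.

```python
FULL_WELL = 1000  # hypothetical saturation level, in arbitrary counts

def merge_superpixel(readings, exposures, full_well=FULL_WELL):
    """Estimate scene brightness (counts per unit exposure) from one superpixel.

    readings:  raw counts from the pixels in the superpixel
    exposures: relative exposure time used for each pixel
    Saturated pixels are ignored; the rest are combined as total counts
    divided by total exposure.
    """
    usable = [(v, t) for v, t in zip(readings, exposures) if v < full_well]
    if not usable:
        # Every pixel clipped: report a lower bound from the shortest exposure.
        return full_well / min(exposures)
    return sum(v for v, _ in usable) / sum(t for _, t in usable)

exposures = [1, 2, 4, 8]  # hypothetical relative exposure times

# Bright region: the two longest exposures have clipped at FULL_WELL.
print(merge_superpixel([400, 800, 1000, 1000], exposures))  # 400.0

# Dark region: no pixel clips, so all four readings contribute.
print(merge_superpixel([10, 20, 40, 80], exposures))  # 10.0
```

In the bright region the short exposures preserve the highlight, while in the dark region the long exposures supply most of the signal, and all of it comes from a single moment in time.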

Is there a catch?

A quad-Bayer pattern does introduce a compromise: It isn’t optimized for the full-size images (48 megapixels for the iPhone 14 Pro and many Android phones), and processing them requires a more complicated demosaicing algorithm. So, in principle, the quality of a 48-megapixel quad-Bayer image will not be as good as a 48-megapixel image with a traditional Bayer array. However, clever demosaicing algorithms can still bring impressive results.

It’s also worth noting that, all else being equal, binning a 48-megapixel sensor down to a 12-megapixel image doesn’t produce better performance than an actual 12-megapixel sensor of the same size. Apple claims its iPhone 14 Pro sensor performs 2.5 times better than the iPhone 13 Pro. While the company is typically cagey about details, physics tells us that this performance gain isn’t coming from pixel binning. It’s coming from the iPhone 14 Pro sensor being larger overall than the previous generation, as well as improvements to how its camera software processes the images. The benefit of a 48-megapixel sensor with pixel binning is flexibility: You can capture a high-resolution image in scenes where there is plenty of light, while still preserving sensitivity in 12-megapixel images.

In a way, it’s only fitting to see a technique that astroimagers are familiar with make its way to consumer technology. After all, it was astronomy, perhaps more than any other field, that drove the development of CCDs. And it was a team at NASA’s Jet Propulsion Laboratory that invented the CMOS image sensor technology that now underlies nearly every consumer camera, including those in phones.

So when you switch pixel binning modes on your phone, or take any digital picture at all, you can know that astronomy has helped to put that technology in the palm of your hand.